Research has found that holding teachers accountable to the local community has scant impact on student learning. Based on a survey of government schools in Karnataka, this column suggests that this need not signal a failure of local accountability. Rather, the issue is that schools are held accountable for student performance on tests that teachers themselves design and administer, and which do not adequately capture learning.

Low learning levels in India’s government schools are most frequently blamed on the lack of accountability of teachers and school administrators in a system that has historically been highly centralised. To address this, successive governments have advocated the decentralisation of schools, requiring the formation of School Development and Management Committees (SDMCs). These committees, comprising parents and members of the local community, are vested with the responsibility of overseeing teachers and ensuring learning. However, available evidence from India and other countries suggests that this transition to a system in which teachers are accountable to the local community has had scant impact on learning (Blimpo and Evans 2011, Pradhan et al. 2011).

These disappointing research results need not signal a failure of local accountability. Accountability systems give schools incentives to improve scores only along the dimensions on which they are evaluated; they need not result in improvements in general skills or skill sets other than those for which schools are accountable. Thus, the use of one set of tests may find accountability institutions to be successful, while evaluations based on a different set of test scores may suggest otherwise. In Texas schools, for example, Klein et al. (2000) demonstrated that accountability resulted in improvement in the test scores that schools were evaluated on (the Texas Assessment of Academic Skills (TAAS)), but not in scores on other comparable tests (National Assessment of Educational Progress (NAEP)). This difference between achievement in ‘high-stakes’ versus ‘low-stakes’ tests has also been noted by other researchers (Figlio and Rouse 2006, Jacob 2005).

This suggests the importance of appropriately defining the learning standards to which schools and teachers will be held accountable; to ensure an effect on learning, accountability must go hand-in-hand with attention to proper assessment of student learning. However, the link between accountability and assessment in developing economies has not received the attention it deserves. Though the Right to Education (RTE) Act of 2009 emphasises the importance of holding schools and teachers accountable for improving learning, it provides little guidance on how to assess school performance. On the contrary, the policy eliminates any centralised testing of students during elementary school, leaving assessment entirely to schools and teachers (through a system referred to as “continuous and comprehensive” evaluation). Schools typically do administer tests to students throughout the academic year, but these tests are not publicly scrutinised and hence may not test students on the competencies stipulated by the State. Consequently, a student may perform well on school tests, but poorly on a test that more closely reflects State standards. If parents and members of SDMCs only have access to student scores on school tests, improvements in these scores may lead them to erroneously conclude that learning standards are rising.

Teacher accountability and student learning levels in Karnataka schools

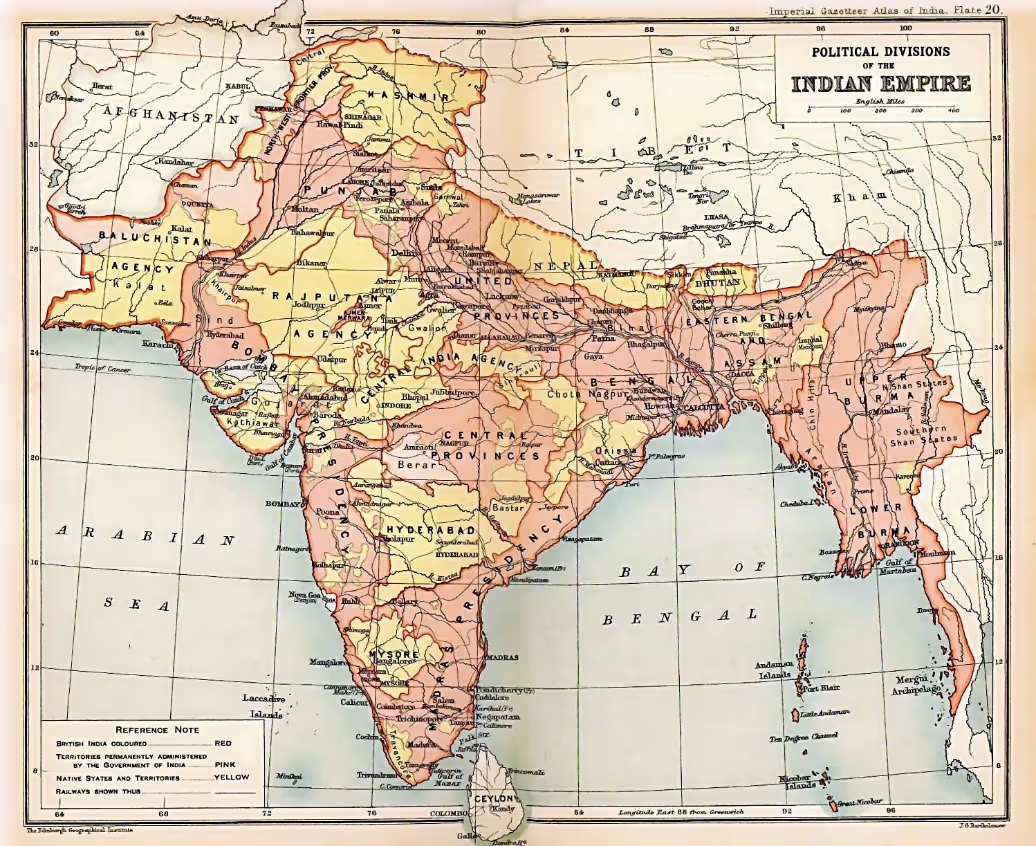

In recent research, my co-authors and I suggest that the insignificant effect on learning of programmes intended to strengthen school management committees, documented in recent evaluation studies (Blimpo and Evans 2011, Pradhan et al. 2011), may reflect differences between the tests used by researchers to evaluate the programme in question and those used by SDMCs and other state-level administrators to monitor schools (Gowda, Kochar, Nagabhushana and Raghunathan 2014). We examine this issue by analysing data from a recent (2013) survey of approximately 700 government schools in 12 districts of rural Karnataka. This research was conducted as part of a Randomised Controlled Trial (RCT) evaluating a programme implemented by the Non-Governmental Organisation (NGO), Prajayatna, to strengthen SDMCs and hence, local accountability of teachers. In ‘treatment’ schools, Prajayatna conducted training sessions for SDMC members during monthly meetings and specifically worked to put in place systems that would enhance the accountability of teachers to the SDMC. All teachers in treatment schools were provided with an “accountability tool” that tracked student performance in each grade, based on school tests. Teachers were required to present this tool to SDMC members in the monthly meetings, so that the community would be well informed of progress in learning (as captured by school test scores). If there was evidence of a sustained failure to improve, Prajayatna facilitated a discussion on possible impediments and steps that could be taken to improve the situation. Our evaluation of the programme revealed that it did succeed in improving the quality of SDMCs, on the basis of a “management” score that evaluated SDMC members’ knowledge of their functions and the extent to which they implemented their responsibilities. Despite this, the effect on learning, as judged by students’ performance on the tests implemented by our survey team, was weak.

To test whether this reflected a difference between our survey scores and the school scores that are reported back to SDMC members and that teachers are accountable for, we compared each student’s performance on our survey tests with that revealed in school tests. We found a significant difference between these two test results: school test scores significantly exceeded survey test scores, with the average school score in language being 66.7 compared to a survey score of 37.1; the average school and survey scores in mathematics were 63.4 and 37.1 respectively.

It may still be the case that both tests identify the same set of ‘good’ and ‘weak’ students; those who score well in survey tests may also score well in school tests, so that both tests are equivalent in identifying ability differences across students. If so, the two scores should be closely correlated. Comparing these two sets of scores for the same set of students (those in grade 5 in 2012-13), the correlation in language scores is 0.41. However, the correlation in math scores is much weaker (0.25) (see Note 1). We find that a significant proportion of students who place in the bottom quartile (see Note 2) in terms of the survey test scores score well on school tests. And, correspondingly, many students who place in the top quartile of survey scores perform poorly in school tests.
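The comparison described above can be illustrated with a minimal sketch. The eight pairs of scores below are hypothetical and chosen only for illustration; they are not the survey data, and the helper names (`pearson`, `percentile_nearest_rank`) and the choice of the school-test median as the threshold for "scoring well" are assumptions made for this example rather than the method used in the research paper.

```python
import math
from statistics import median

# Hypothetical scores for eight students (illustrative only, not the survey data).
survey_scores = [10, 20, 25, 30, 40, 45, 60, 70]
school_scores = [75, 50, 80, 55, 60, 85, 65, 90]


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def percentile_nearest_rank(scores, pct):
    """Nearest-rank percentile: smallest score with at least pct% of scores at or below it."""
    ordered = sorted(scores)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# How closely do the two tests move together?
r = pearson(survey_scores, school_scores)

# Students in the bottom quartile of the survey test...
cutoff = percentile_nearest_rank(survey_scores, 25)
# ...who nonetheless score above the median on the school test (an assumed threshold).
school_median = median(school_scores)
mismatched = [
    i
    for i, (survey, school) in enumerate(zip(survey_scores, school_scores))
    if survey <= cutoff and school > school_median
]

print(f"correlation: {r:.2f}")
print(f"bottom-quartile cutoff on survey test: {cutoff}")
print(f"students weak on survey test but strong on school test: {mismatched}")
```

A low correlation combined with a non-empty `mismatched` list is the pattern the text describes: the two tests disagree on who the weak students are.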

Why didn’t greater teacher accountability improve student learning levels?

In contrast to the weak effect of the programme on survey tests, we found a significant effect on school test scores. However, the improvement was concentrated amongst the weakest students, mirroring evidence from the US that increased accountability of teachers generally results in improvement in ‘high-stakes’ test scores for the weakest students. In contrast, scores from the survey tests we implemented record the largest improvements amongst the best students.

The differential effect of the programme on school and survey test scores could be caused by a number of factors. We support the hypothesis that it reflects the effect of accountability by also examining the effect of an alternative accountability arrangement that operates through a more centralised mechanism: the organisation of schools across villages into clusters that are supervised by a Cluster Resource Person (CRP). Like the SDMC members, the CRP can only judge school and teacher performance by school test scores. We find that the number of school visits by the CRP increases school scores, but has no significant effect on survey scores (see Note 3).
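The kind of comparison reported here can be sketched as two simple regressions of test scores on the number of CRP visits, one for each type of test. The figures below are hypothetical and the function `ols_slope_intercept` is an illustrative helper; the actual analysis would also include control variables and tests of statistical significance, which this sketch omits.

```python
def ols_slope_intercept(xs, ys):
    """Slope and intercept of a one-variable ordinary least squares fit."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data for five schools (illustrative only, not the survey data).
crp_visits = [0, 1, 2, 3, 4]
school_test_means = [60, 62, 64, 66, 68]  # scores reported by teachers
survey_test_means = [37, 36, 38, 37, 37]  # scores on an independent test

school_slope, _ = ols_slope_intercept(crp_visits, school_test_means)
survey_slope, _ = ols_slope_intercept(crp_visits, survey_test_means)

print(f"change in school score per extra CRP visit: {school_slope:.2f}")
print(f"change in survey score per extra CRP visit: {survey_slope:.2f}")
```

In this made-up example the school scores rise steadily with visits while the survey scores barely move, which is the qualitative pattern the text reports.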

The significant effect of the programme on school scores, but not on survey scores, could be a consequence of differences in the set of skills being tested in the two tests. An alternative interpretation is that, as has been found in the US literature (Jacob and Levitt 2003), teachers respond to the pressure of accountability by simply inflating reported school test scores, without any attempt to ensure improved learning. While we cannot directly test these alternative hypotheses, we provide information that supports the latter. Specifically, we show that while the survey test scores respond to known determinants of school quality in the expected way, school scores do not. For example, higher pupil-teacher ratios reduce our survey scores, but not school scores, suggesting that our survey scores reflect ‘real’ learning more closely.

We also show that parents’ decisions affecting their children’s schooling outcomes are influenced more by school scores than by survey scores. This is natural; in countries characterised by low levels of adult literacy, parents’ assessments of their children’s learning will come primarily from the information provided by teachers. This has several implications. First, recorded improvements in school scores may reduce the pressure placed by parents, local institutions and even higher level institutions on schools and teachers to improve. Thus, the absence of accurate information at the local level on learning standards may be a significant determinant of the low levels of learning discerned in tests such as ASER (Annual Status of Education Report). Second, any misinformation contained in school tests may be reinforced by parent behaviour. In our research paper, we show that students from Scheduled Castes and Tribes (SC/STs) generally do poorly in school tests, relative to students from forward castes, though this gap is far less significant in our own survey tests. Based on the information contained in school tests, parents from scheduled castes and tribes may erroneously believe that their children are not learning, and hence may end up withdrawing them from school.

Our results suggest that promoting institutions to enhance teacher accountability will not enhance learning unless the outcomes that teachers are accountable for are well defined and their association with learning outcomes well established. Additionally, to minimise the possibility of spurious test scores, attention has to be paid to the administration of tests. In the current system in place in India, whereby progress through elementary school is essentially not evaluated by any external tests, and where the test scores reported by teachers are generally not scrutinised, it is quite possible to have improvements in accountability without any effect on learning.

Notes:

1. In this context, correlation measures how the two test scores (survey and school) move in relation to each other. A correlation value between 0 and 1 implies that if one set of scores increases, the other tends to increase as well. The higher the value of the correlation (the closer to 1), the stronger this co-movement.

2. The bottom quartile, in this context, refers to the set of students whose scores lie at or below the 25th percentile, which is the value of the test score below which 25% of test scores lie. Similarly, the top quartile is the set of students whose scores lie at or above the 75th percentile, which is the value of the test score above which 25% of test scores lie.

3. This was assessed by a regression analysis of the effect of the number of visits of the Cluster Resource Person on school and survey test scores.

Further Reading

- Blimpo, MP and DK Evans (2011), “School-based Management and Educational Outcomes: Lessons from a Randomized Field Experiment”, Manuscript, Stanford University.

- Figlio, D and CE Rouse (2006), “Do Accountability and Voucher Threats Improve Low-Performing Schools?”, Journal of Public Economics 90(1-2): 239-255.

- Gowda, K, A Kochar, CS Nagabhushana and N Raghunathan (2014), “Evaluating Institutional Change through Randomized Controlled Trials: Evidence from School Decentralization in India”, Manuscript, Stanford University.

- Jacob, BA (2005), “Accountability, Incentives and Behavior: The Impact of High-Stakes Testing in the Chicago Public Schools”, Journal of Public Economics 89(5-6): 761-796.

- Jacob, BA and SD Levitt (2003), “Rotten Apples: An Investigation of the Prevalence and Predictors of Teacher Cheating”, Quarterly Journal of Economics 118(3): 843-878.

- Klein, S, L Hamilton, D McCaffrey and B Stecher (2000), “What do Test Scores in Texas Tell Us?”, Education Policy Analysis Archives 9(49).

- Pradhan, M, D Suryadarma, A Beatty, M Wong, A Alisjahbana, A Gaduh and RP Artha (2011), “Improving Educational Quality Through Enhancing Community Participation”, World Bank, Washington D.C.
